After more than a year in lockdown, and after going through a whole range of emotions, everyone must have thought about the importance of emotions at least once. Reading emotions and understanding how the other person feels play a huge role in communication. So it only makes sense that AI systems should be able to do this too if they are to be more useful. This is where artificial emotional intelligence comes into play.

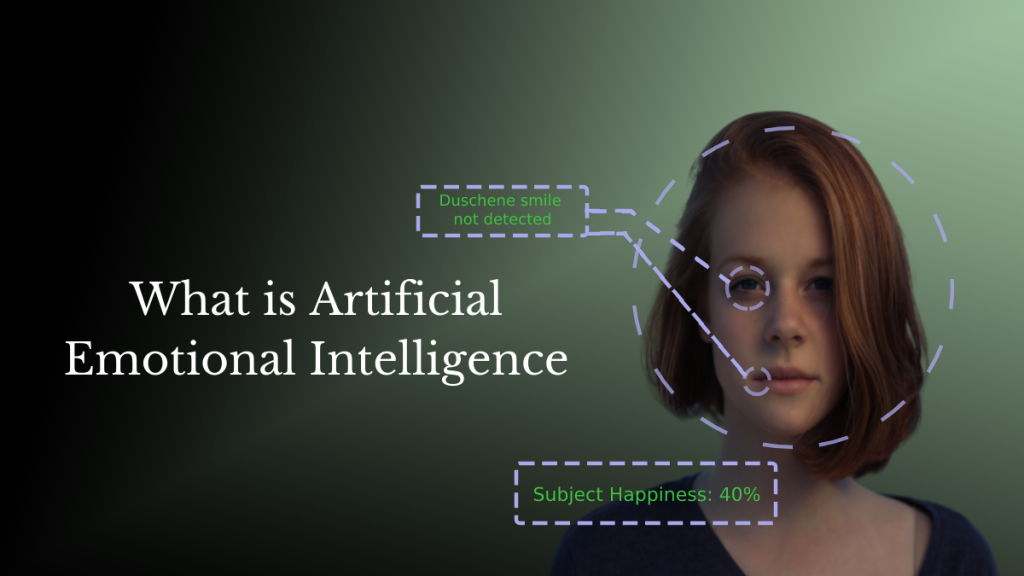

Artificial emotional intelligence is the ability of machines or AI systems to read human emotions. This includes reading facial cues, expressions, gestures, tone of voice, and anything else that humans use to convey emotion. As you can imagine, better artificial emotional intelligence can lead to better interaction between humans and AI systems. You won’t have to plan out a question in your head before you ask Alexa for help; it will be just like asking a friend.

Artificial emotional intelligence is closely related to the field of affective computing. In fact, the origins of emotional AI, or emotion AI, can be traced back to a pioneering paper by Rosalind Picard in 1995. Picard is credited with starting the field of artificial emotional intelligence and affective computing. Affective computing is the study and development of systems and devices that can recognise, interpret, and simulate human affects (emotions).

Applications of artificial emotional intelligence

Artificial emotional intelligence is not just for smarter digital assistants; it has a whole host of other applications.

Better driverless cars

The Indian meme community went absolutely wild when Tesla announced they were coming to India. How can Tesla’s Autopilot hope to navigate the wild west (or wild east) of driving? Apart from concerns about the road infrastructure, how was a driverless car going to communicate with other drivers? Driving on narrow rural roads and in the city requires understanding the subtle expressions and gestures drivers use to communicate their intentions to each other.

Even globally, at least until driverless cars are the norm, algorithms have to understand the intentions of other drivers, as well as pedestrians. Artificial emotional intelligence can play a role.

Even before driverless cars arrive, cameras and other systems are already used to detect driver fatigue. In cars with level 2 or level 3 self-driving tech, drivers are often monitored with eye-tracking to ensure that they are paying attention to the road even as the car drives itself. And manufacturers from Hyundai to Audi are offering such features even in cars without autonomous features.

Some of them use a camera to monitor whether the driver is staying in lane or to watch the driver’s face, while others rely on input from the steering wheel. Some systems, like Mazda’s driver attention system, learn driving patterns at the beginning of the ride and then send warnings if the driving patterns exceed certain thresholds later on.
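
To make the "learn a baseline, then warn on deviation" idea concrete, here is a minimal Python sketch. It is not Mazda’s actual algorithm, which is not described here. The class name `DrivingPatternMonitor`, the steering-correction readings, the baseline size, and the z-score threshold are all assumptions made purely for illustration.

```python
from statistics import mean, stdev


class DrivingPatternMonitor:
    """Hypothetical monitor: learns a baseline early in the ride, then flags outliers."""

    def __init__(self, baseline_size=5, max_z=3.0):
        self.baseline_size = baseline_size  # assumed number of samples used for learning
        self.max_z = max_z                  # assumed warning threshold, in standard deviations
        self.baseline = []

    def update(self, steering_correction):
        """Return True if this reading should trigger a warning."""
        # Learning phase: collect samples and never warn.
        if len(self.baseline) < self.baseline_size:
            self.baseline.append(steering_correction)
            return False
        mu = mean(self.baseline)
        sigma = stdev(self.baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change counts as a deviation.
            return steering_correction != mu
        # Monitoring phase: warn when the reading is far from the learned baseline.
        return abs(steering_correction - mu) / sigma > self.max_z


# Made-up readings: five calm samples to learn from, then one normal and one erratic.
monitor = DrivingPatternMonitor()
readings = [1.0, 1.2, 0.9, 1.1, 1.0, 1.1, 4.0]
warnings = [monitor.update(r) for r in readings]
print(warnings)
```

Only the last reading sits far enough from the learned baseline to raise a warning. A real system would likely combine several signals and keep adapting over time, but the two-phase structure is the same.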

Automotive AI startup Affectiva uses a set of cameras to monitor the cabin as a whole. Its main features include monitoring the attentiveness of the driver and monitoring the passengers for comfort and safety. The system reads human emotions by carefully analysing the face. Systems like these can improve safety and comfort, both now and in the future.

Artificial emotional intelligence for mental health

Identifying a patient’s emotions and what triggers them is one of the first steps in learning to cope with those emotions (FYI, this is probably an oversimplification). But oftentimes we find it difficult to understand our own emotions, or to explain them well enough to seek help. AI systems that can understand emotions can help provide better care for patients.

For example, WeUnlearn, a non-profit based in India working towards better mental health care, has developed a chatbot for adolescents. The chatbot, named Wulu, classifies teens as high, medium, or low risk based on a survey and helps equip them with soft skills. It shows the role artificial intelligence can play in mental health care. Now imagine if the system could actually read human emotions and react to them.

MIT Media Lab has created a wearable device that responds to human emotions. The device reads heart rate and breathing rate by capturing chest vibrations and releases soothing scents for the user. It can help users calm down and cope with their emotions.

Such devices that assist users by predicting or detecting panic attacks or anxiety attacks can be very useful and could become ubiquitous in the future.

Applications of artificial emotional intelligence in marketing/user testing

Before releasing a promo or a poster, or before starting a campaign, testing it in front of a small group from the target audience is an important step in the process. It can show how the audience reacts and whether the material elicits the response you want, and it can help predict how successful a campaign will be.

The problem with the current process is that it relies a lot on surveys filled out by the audience. And surveys don’t always reflect how the audience actually feels. Anyone who has ever asked their friend their thoughts on a work of art they made knows this. You can never really tell if they liked it or not based on what they say alone. Even if they’re not your friends, “honest feedback” is a tricky thing.

Here’s where AI that can read emotions comes into play. The above-mentioned startup, Affectiva, has a media analytics solution that does exactly this. Their solution can measure the responses of the test audience as well as the actual audience of marketing campaigns. Marketers don’t have to rely on surveys; instead, they can measure the actual reaction and see whether their campaign will have the expected results.

We can picture similar applications for user testing as well. During product testing, users may, for example, report under survey conditions that they like a particular product feature. But after prolonged real-world usage, the experience may be different. AI that can read emotions can make designers aware of this.

Other applications

As discussed above, we can expect more natural interactions with digital assistants. In fact, this may actually lead to an AI that can pass the Turing Test. (Maybe?)

Another application is in customer support. We’ve all been in one of those situations where a machine answers a customer care number and annoys us no end before finally putting us through to an actual human being. But better customer care is not just about answering questions. It’s about making the customer feel prioritized and understood. If customer support executives could get a heads-up on the customer’s emotional state, they would find that useful.

Cogito, an AI startup, does this and more. Their system literally coaches your customer support executives in real time as they talk to customers. By analysing the call as it happens, the system prompts the executive towards the next step.

Robust artificial emotional intelligence systems can create better lie detectors. In some ways, the existing lie detectors are rudimentary emotional analysis systems. They measure skin conductance, heart rate, breathing rate, etc. to see if someone is lying. Despite their widespread usage, these systems are notorious for their inaccuracy.

Research with professionals who are highly skilled at telling whether someone is lying, such as FBI, Secret Service, and CIA agents, has shown the importance of microexpressions. These are tiny expressions that last for barely a second and appear when someone is lying. Poker players are familiar with the “tells” a person gives when they’re bluffing. Mentalists are really good at spotting these microexpressions.

As you can imagine, a system that can read emotions by analysing a person’s face in real time can make for a better lie detector.

Artificial emotional intelligence: cause for concern?

As with any form of AI, emotional AI or emotion AI systems are only going to be as good as their training data. Garbage in, garbage out. AI researchers may pass on their biases to their AI systems (like father, like…). And this can have disastrous consequences.

People from many ethnicities are often wrongly stereotyped as too aggressive or quick-tempered. If the training data reflects these prejudices, the systems will inevitably attribute these behaviours to members of those communities and label them as aggressive. And this will cause systematic discrimination wherever such a system is used.
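
One simple way to look for this kind of skew is to compare how often a system assigns a given label to each group. The Python sketch below does that on made-up data. The function names `label_rate_by_group` and `rate_gap`, the groups, and the predictions are all hypothetical and are not taken from any real emotion AI system.

```python
from collections import Counter


def label_rate_by_group(predictions, label):
    """predictions: iterable of (group, predicted_label) pairs.

    Returns the fraction of each group's predictions that equal `label`.
    """
    totals = Counter()
    hits = Counter()
    for group, predicted in predictions:
        totals[group] += 1
        if predicted == label:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


def rate_gap(rates):
    """Difference between the highest and lowest per-group rate."""
    return max(rates.values()) - min(rates.values())


# Made-up model outputs for two hypothetical groups shown comparable footage.
predictions = [
    ("group_a", "calm"), ("group_a", "calm"),
    ("group_a", "aggressive"), ("group_a", "calm"),
    ("group_b", "aggressive"), ("group_b", "aggressive"),
    ("group_b", "calm"), ("group_b", "aggressive"),
]

rates = label_rate_by_group(predictions, "aggressive")
print(rates, rate_gap(rates))
```

A large gap on comparable inputs does not prove bias by itself, but it is a warning sign that the training data or labels deserve a closer look before the system is deployed.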

Research has shown that there is a widespread assumption in the medical community that black people feel less pain compared to the white population. Doctors tend to prescribe lower doses of pain medication to black people due to this reason. An emotional AI that can gauge pain can substantially improve pain management. But if the same prejudices seep into these AI systems, you can only imagine the consequences.

Privacy is another concern with artificial emotional intelligence. A person’s emotions are innately private, almost like their thoughts. Systems that can read your emotions and sell you things based on them would be a whole other kind of privacy issue compared to what social media networks or search engines are doing.

Recently, police from the Indian state of Uttar Pradesh announced plans to roll out facial recognition systems that track women for signs of distress. The idea was to keep women safe from potential perpetrators. Activists slammed the move over privacy concerns.