Anyone who has read anything about AI has probably heard of the Turing Test. It’s a test devised by the mathematician and cryptographer Alan Turing, who is often credited with pioneering the idea of artificial intelligence. The purpose of the test was to determine whether a machine is intelligent.

What is the Turing Test?

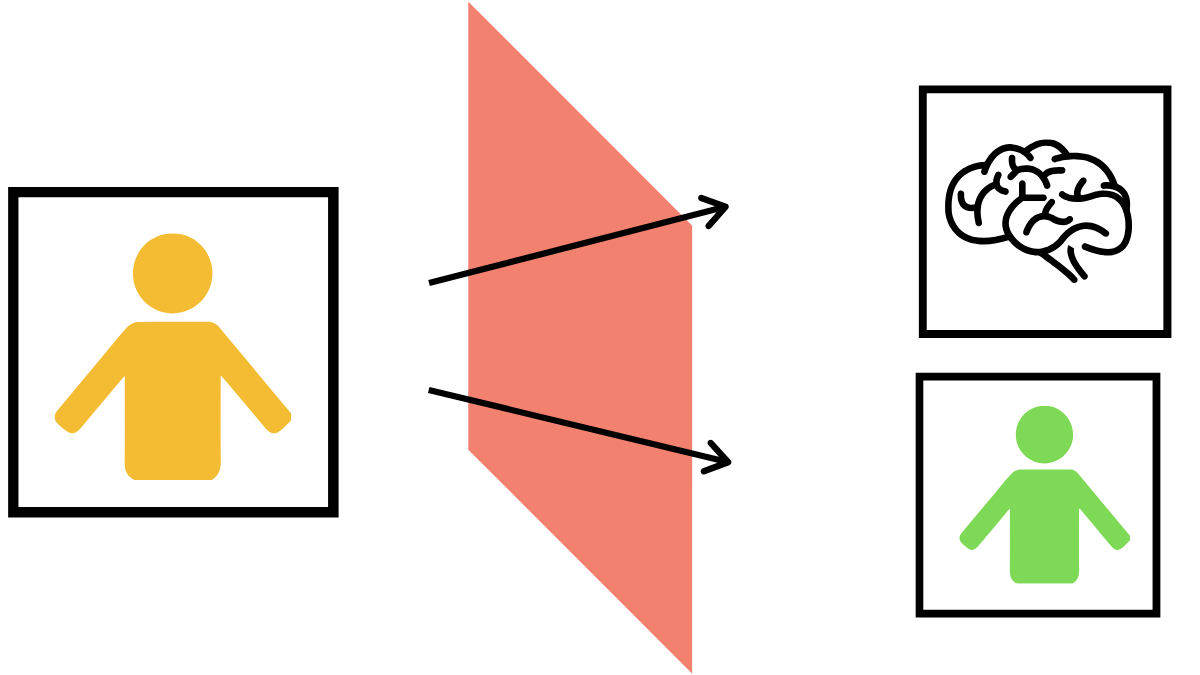

For the uninitiated, the Turing test involves three participants: two humans and the computer being tested. They are all separated from each other. One of the humans is the evaluator. The evaluator communicates with both of the others but does not know which one is the computer and which is the human. At the end of the test, if the evaluator is not able to tell which is the computer and which is the human, the computer is said to have passed the test and to exhibit intelligent behavior equivalent to that of a human being.
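The setup described above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the names `run_round` and `identification_rate`, the "A"/"B" labels, and the idea of repeating the test over many rounds are hypothetical choices, not part of Turing's description. The judge only ever sees two anonymous sets of answers and has to guess which one came from the machine.

```python
import random
from typing import Callable, List

# A respondent maps a question to an answer.
Respondent = Callable[[str], str]
# A judge sees the answers from "A" and "B" and returns the label
# ("A" or "B") that it believes belongs to the machine.
Judge = Callable[[List[str], List[str]], str]


def run_round(human: Respondent, machine: Respondent, judge: Judge,
              questions: List[str], rng: random.Random) -> bool:
    """Run one blind round; return True if the judge spots the machine."""
    # Hide the identities by assigning the labels at random.
    machine_is_a = rng.random() < 0.5
    a, b = (machine, human) if machine_is_a else (human, machine)
    answers_a = [a(q) for q in questions]
    answers_b = [b(q) for q in questions]
    guess = judge(answers_a, answers_b)
    return guess == ("A" if machine_is_a else "B")


def identification_rate(human: Respondent, machine: Respondent, judge: Judge,
                        questions: List[str], rounds: int = 100,
                        seed: int = 0) -> float:
    """Fraction of rounds in which the judge correctly picked the machine."""
    rng = random.Random(seed)
    correct = sum(run_round(human, machine, judge, questions, rng)
                  for _ in range(rounds))
    return correct / rounds
```

A rate close to 0.5 would mean the judge is doing no better than guessing, which is the situation in which the machine is said to pass. A rate close to 1.0 means the machine is easy to spot.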

The test has spawned many variations, the most popular being the reverse Turing test, best known in the form of the CAPTCHA, where humans try to convince a computer that they’re human and not another computer. There is a variant that allows only true/false answers, and another called the Total Turing Test, which also tests the ability of a computer to perceive objects and manipulate them (for example, asking an AI with a robotic arm to pick up the red fruit from a basket).

Intelligence

Efforts to quantify intelligence have not resulted in one clear test. The most popular test of intelligence, the IQ test, measures mainly logical and mathematical ability and is flawed on many levels. It is hard even to define intelligence, as it differs from species to species and from person to person. If you consider intelligence to be the ability of a species to survive, tardigrades may be the most intelligent creatures on the planet. And the type of intelligence each person possesses varies widely. The Internet is full of stories about successful, eccentric CEOs who do not do well socially. So determining whether a machine is intelligent could be even more difficult.

Here the Turing test provides a simple solution: compare the machine with a human. If a machine can communicate like a human, it passes the test and is deemed intelligent. The test is very simple to define and serves the purpose for which it was intended, which is to determine how human a machine can appear. This means that the machine does not have to give correct answers to a question, only answers that seem human.

Why it may not be ideal

Of course, the simplicity of the test also means that it overlooks many other factors. For example, the level of knowledge of the humans involved in the test matters. A layperson who is not familiar with chatbots may be convinced that they are talking to a human. In 1966, Joseph Weizenbaum created a program called ELIZA that acted like a Rogerian psychotherapist. After communicating with the system, some subjects were difficult to convince that they had not been talking to a human. But the system was simple and did not understand the conversation at all. It basically searched for keywords and gave scripted replies based on them. Another system, PARRY, developed by Kenneth Colby, acted like a paranoid schizophrenic; in one test, a group of 33 psychiatrists could tell its transcripts apart from those of real patients only about half the time. In 2014, a program called Eugene Goostman was able to convince about a third of the judges at a Turing test event that it was a 13-year-old Ukrainian boy.
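To make the keyword-and-canned-reply idea behind ELIZA concrete, here is a minimal sketch in Python. The rules, the `reply` function and the default reply are all made up for illustration; this is not Weizenbaum's actual script, just the general technique of matching a keyword and filling in a prepared response.

```python
import re

# Hypothetical rules, invented for this example: each pairs a keyword
# pattern with a canned reply. "{0}" is filled with the matched text.
RULES = [
    (re.compile(r"\b(mother|father|family)\b", re.IGNORECASE),
     "Tell me more about your family."),
    (re.compile(r"\bI feel (.+)", re.IGNORECASE),
     "Why do you feel {0}?"),
    (re.compile(r"\b(sad|unhappy|depressed)\b", re.IGNORECASE),
     "I am sorry to hear that you are {0}."),
]

# Used when no keyword matches, so the conversation keeps moving.
DEFAULT_REPLY = "Please go on."


def reply(message: str) -> str:
    """Return the canned reply of the first rule whose keyword matches."""
    for pattern, template in RULES:
        match = pattern.search(message)
        if match:
            # Reuse the user's own words, minus trailing punctuation.
            return template.format(match.group(1).rstrip(".!?"))
    return DEFAULT_REPLY
```

Calling `reply("I feel tired.")` returns "Why do you feel tired?", which sounds attentive even though the program has no idea what "tired" means. Anything that matches no rule gets "Please go on."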

At first, all three may appear to have beaten the Turing Test, but the characters they were pretending to be gave the programs a lot of leeway. ELIZA didn’t have to pretend to be an average human; it just had to act like a psychotherapist in an initial meeting. PARRY’s responses only had to sound like those of a paranoid schizophrenic, albeit to a group of psychiatrists. The same goes for Eugene Goostman: answers that did not sound like an adult’s were acceptable because the judges assumed they were talking to a kid. The point is that the systems defeated the Turing Test only in a very limited way.

There are other drawbacks to the test, one of them being the interrogators. As in the case of ELIZA, people who are not aware of artificial intelligence systems, or who don’t know that they may be talking to one, may mistake a machine for a human, but someone who knows about such systems and has been told of the possibility may spot the machine easily. While Alan Turing did predict that an average interrogator would have no more than a 70 percent chance of making the right identification after five minutes of questioning, he doesn’t specify the level of knowledge the interrogator should have.

Even if a system doesn’t pass the Turing Test, it doesn’t mean that the system is not intelligent. Some AI systems are designed for particular tasks, such as playing chess or driving cars, and not for conversing with humans. In fact, systems that are more intelligent than humans may fail the test precisely because humans make mistakes and are unable to do some tasks. If someone asks an AI system to solve a Rubik’s cube and the machine does it faster than an average person, the machine may be spotted. Many machine learning systems that exist today, and that provide information better than humans can, may not pass the Turing Test. AI chess players may not pass it either. As I write this, I feel like I’m consoling an AI that just failed the Turing Test despite being smart.

Does it matter if an AI system has passed the Turing Test?

Now there are questions about whether the Turing Test serves any real purpose. From my understanding, a system that can actually understand a question, instead of just searching for keywords, is more or less an advanced search engine. Such systems will be useful in developing chatbots and personal assistants (I’d like one JARVIS please, thank you very much). A system that can understand the information presented to it, for example one that can read a Wikipedia page about a given topic and answer questions about it (actually answer them, not present or read out the paragraph that contains the answer), will have real-world applications (and may even be more intelligent than what the Indian educational system aims for).

There is a popular thought experiment called the Chinese room, proposed by John Searle, which argues that the Turing Test cannot be used to determine whether a system is intelligent. It is one of the biggest arguments against the Turing Test. The idea is that a system can simulate intelligence without being intelligent. Searle suggested that if you give a person who doesn’t understand Chinese a set of instructions for producing Chinese replies to Chinese messages, the person will be able to produce convincing replies, but that won’t mean the person understands them. In the same way, a machine may be able to mimic consciousness without having consciousness. An AI with actual intelligence, which really understands the instructions it receives and the replies it gives, is called a strong AI. According to Searle, a strong AI is not possible with conventional computing alone. I’m not sure which side of the debate I stand on.

While similar tests have been suggested for other aspects of AI, the classical Turing test applies more or less only to chatbots, or conversational AI. AI systems combined with IoT systems are becoming popular in homes, and an important part of them is the digital assistant used to interact with them. As digital assistants become more and more common, people have been asking whether it’s even desirable for AI to be human-like. While being able to get information by simply asking an AI seems great, there have been suggestions to ensure that humans who speak to an AI know they are not speaking to a human. For example, Google Duplex announces that it is an automated system and not a human.

I found the Turing test intriguing, and I feel it raises some questions. If we do develop an artificial intelligence system that is exactly like a human being, how will that affect our society? A key characteristic of a system that truly beats the Turing test would be its ability to understand and perceive emotions. For that, I think, the system will have to feel those emotions and, essentially, be human. Is it ethical to program such a system to carry out tasks? Humans have long treated fellow humans in a subhuman manner because they look different or speak a different language. In such a situation, is it even ethical to create a system like that? If we combine the intelligence of such a system, which can perceive and understand the world around it, with the brute computing power offered by its hardware, what will the implications be? Yes, I am a big fan of Person of Interest.

I can imagine a well-designed Turing test coming in real handy when Skynet comes online.